Transformers in machine learning represent a revolutionary architecture that has transformed how computers process sequential data. While sharing the same name as electrical transformers, these artificial intelligence components serve an entirely different purpose in the world of deep learning. This article explains the machine learning transformer architecture, its working principles, and how it differs from traditional sequence processing models.

Understanding the Transformer Architecture

The transformer model was first introduced in the groundbreaking 2017 paper “Attention Is All You Need” by Vaswani et al. The architecture relies entirely on attention, dispensing with the recurrent connections (and the convolutions) that earlier sequence-to-sequence models depended on, and it quickly reshaped natural language processing.

Self-Attention Mechanism

The core innovation of transformers is the self-attention mechanism, which lets the model weigh how relevant every word in a sentence is to every other word when building each word’s representation. Unlike recurrent models that process words one after another, transformers compute these relationships for all words at once. Processing in parallel makes training far more efficient on modern hardware. Separately, because any two words are connected directly rather than through a chain of intermediate steps, the model also handles long-range dependencies in text better.
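As an illustration, the sketch below implements scaled dot-product self-attention, softmax(QKᵀ / √d_k)V, the attention function defined in the original paper. It uses plain Python lists rather than a tensor library, and the projection matrices `w_q`, `w_k`, and `w_v` are hypothetical stand-ins for weights that a real model would learn.

```python
import math

def softmax(xs):
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    # Multiply an (n x k) matrix by a (k x m) matrix, both stored as lists of rows.
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(row) for row in zip(*m)]

def scaled_dot_product_attention(q, k, v):
    """Return softmax(Q K^T / sqrt(d_k)) V together with the attention weights."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # one row of scores per query position
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights

def self_attention(x, w_q, w_k, w_v):
    # In self-attention, queries, keys, and values are all projections of the same input.
    return scaled_dot_product_attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v))
```

Each row of `weights` sums to 1 and says how much one word draws on every other word; dividing by √d_k keeps the dot products from growing with the vector width and saturating the softmax.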

Positional Encoding

Self-attention on its own is insensitive to word order: shuffling the input words would simply shuffle the outputs. Transformers therefore need another way to represent order. Positional encoding solves this by adding a vector that describes each word’s position to that word’s embedding. The original paper builds these vectors from sine and cosine functions of different frequencies; its authors hypothesized that this choice might let the model extrapolate to sequences longer than those seen during training, and they reported that learned positional embeddings performed nearly identically.
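The sinusoidal scheme can be sketched as follows, using the paper’s definition PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). The function names are illustrative, and the code assumes the embeddings are already available as lists of floats of width `d_model`.

```python
import math

def positional_encoding(num_positions, d_model):
    """Sinusoidal encodings: even dimensions use sine, odd dimensions use cosine."""
    table = []
    for pos in range(num_positions):
        row = []
        for dim in range(d_model):
            i = dim // 2  # each sine/cosine pair shares one frequency
            angle = pos / (10000 ** (2 * i / d_model))
            row.append(math.sin(angle) if dim % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

def add_positions(embeddings):
    # The encoding is added element-wise to the token embeddings (both have width d_model).
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, enc)] for emb, enc in zip(embeddings, pe)]
```

Low dimensions oscillate quickly and high dimensions slowly, so every position receives a distinct pattern, and position 0 always encodes to alternating 0s and 1s.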

Key Components of Transformer Models

Transformer architectures consist of several specialized components that work together to process sequential data efficiently.

Encoder-Decoder Structure

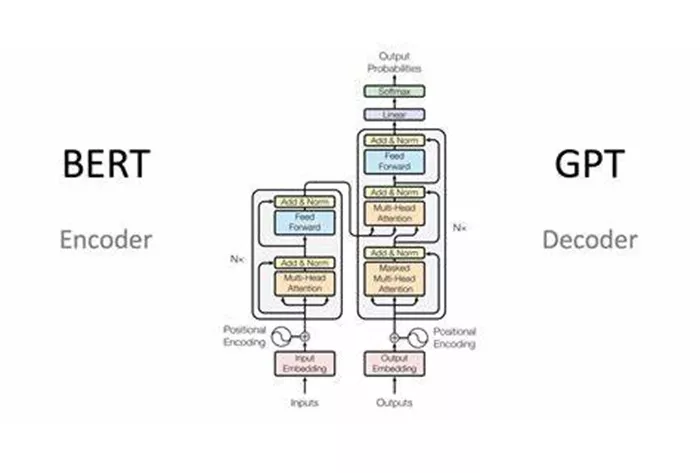

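The original transformer uses an encoder-decoder design with six layers on each side. The encoder processes the input sequence and builds rich representations, while the decoder generates the output sequence from these representations. Each encoder layer contains a self-attention sublayer and a feed-forward network. Each decoder layer has the same two sublayers plus a third that attends over the encoder’s output, and its self-attention is masked so that a position cannot look at later positions. Every sublayer is wrapped in a residual connection followed by layer normalization, which stabilizes training.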
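The residual-plus-normalization wrapping can be sketched as below, following the original paper’s arrangement LayerNorm(x + Sublayer(x)). This is a minimal sketch under stated assumptions: the sublayers are passed in as hypothetical callables, the learned gain and bias of layer normalization are omitted, and only an encoder layer is shown. Many later models instead normalize before the sublayer (the “pre-norm” arrangement).

```python
import math

def layer_norm(vec, eps=1e-6):
    # Normalize one position's vector to zero mean and unit variance.
    # Learned gain and bias parameters are omitted in this sketch.
    mean = sum(vec) / len(vec)
    var = sum((v - mean) ** 2 for v in vec) / len(vec)
    return [(v - mean) / math.sqrt(var + eps) for v in vec]

def residual_sublayer(x, sublayer):
    # Post-norm arrangement: LayerNorm(x + Sublayer(x)), applied row by row (per position).
    y = sublayer(x)
    return [layer_norm([a + b for a, b in zip(xr, yr)]) for xr, yr in zip(x, y)]

def encoder_layer(x, self_attention, feed_forward):
    # An encoder layer: a self-attention sublayer followed by a feed-forward sublayer,
    # each wrapped in a residual connection and layer normalization.
    x = residual_sublayer(x, self_attention)
    return residual_sublayer(x, feed_forward)
```

Stacking six calls to `encoder_layer` would give the shape of the original encoder; a decoder layer would add a masked self-attention sublayer and a cross-attention sublayer using the same wrapper.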

Multi-Head Attention

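Multi-head attention allows the model to attend to different parts of the input simultaneously. Instead of computing a single attention function, the transformer projects queries, keys, and values into several lower-dimensional subspaces, one per attention “head,” so that each head can learn a different attention pattern. The heads run in parallel, and their outputs are concatenated and passed through a final linear projection. In the original base model, eight heads of dimension 64 together match the model width of 512, so the total cost is similar to that of a single full-width head while the model captures more diverse relationships within the input data.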
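A minimal sketch of multi-head self-attention follows. It assumes full-width projection matrices whose columns are split evenly among the heads (equivalent to giving each head its own smaller projection), and the weights `w_q`, `w_k`, `w_v`, and `w_o` are hypothetical placeholders for learned parameters.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def matmul(a, b):
    # (n x k) times (k x m), matrices stored as lists of rows.
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def attention(q, k, v):
    # Scaled dot-product attention for one head.
    d_k = len(k[0])
    out = []
    for qi in q:
        w = softmax([sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k])
        out.append([sum(w[j] * v[j][c] for j in range(len(v))) for c in range(len(v[0]))])
    return out

def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads):
    d_model = len(w_q[0])
    assert d_model % num_heads == 0, "d_model must divide evenly across heads"
    d_head = d_model // num_heads
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    heads = []
    for h in range(num_heads):
        cols = slice(h * d_head, (h + 1) * d_head)
        # Each head attends within its own d_head-wide slice of the projections.
        heads.append(attention([r[cols] for r in q], [r[cols] for r in k], [r[cols] for r in v]))
    # Concatenate the head outputs position by position, then apply the output projection.
    concat = [sum((head[i] for head in heads), []) for i in range(len(x))]
    return matmul(concat, w_o)
```

Because each head works on a `d_head`-wide slice, its scores are computed from different features of the input than the other heads’ scores, which is what lets the heads specialize.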

How Transformers Process Information

Information flows through a transformer in a fixed sequence of stages: tokens are embedded, combined with positional information, and then refined by a stack of attention and feed-forward layers.

Input Embedding Layer

Raw input tokens (typically words or subwords) first pass through an embedding layer that converts them into high-dimensional vector representations. These embeddings capture semantic relationships between words, with similar words having similar vector representations. The embedding layer is trained alongside the rest of the model, allowing it to learn task-specific representations.

Feed-Forward Networks

Each transformer layer also contains a position-wise feed-forward network: the same small network, with the same weights, is applied to every position in the sequence independently. It consists of two linear transformations with a ReLU activation in between, and its inner layer is wider than the model dimension (2,048 versus 512 in the original base model). Despite this simplicity, the feed-forward sublayers account for a large share of the model’s parameters and carry out much of the per-position transformation of the attention outputs.
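The feed-forward sublayer computes FFN(x) = max(0, xW₁ + b₁)W₂ + b₂ for each position. The sketch below assumes weights stored as lists of rows; the helper names are illustrative.

```python
def relu(v):
    return [max(0.0, a) for a in v]

def linear(v, w, b):
    # v has length d_in, w is d_in x d_out (list of rows), b has length d_out.
    return [sum(v[i] * w[i][j] for i in range(len(v))) + b[j] for j in range(len(b))]

def position_wise_ffn(x, w1, b1, w2, b2):
    """FFN(x) = max(0, x W1 + b1) W2 + b2, applied to each position independently."""
    # The same weights are reused for every row (position); positions never interact here.
    return [linear(relu(linear(row, w1, b1)), w2, b2) for row in x]
```

Since each row is transformed on its own, reordering the input positions simply reorders the outputs; mixing information across positions is left entirely to the attention sublayers.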

Applications of Transformer Models

Transformer architectures have found widespread use across various domains of artificial intelligence.

Natural Language Processing

Transformers dominate modern NLP applications, powering state-of-the-art systems for machine translation, text summarization, and question answering. Models like BERT use bidirectional transformer encoders to understand context from both directions, while GPT models employ autoregressive transformer decoders for text generation.

Computer Vision

Vision transformers (ViTs) adapt the architecture to images by splitting each image into fixed-size patches (for example, 16 × 16 pixels) and treating the sequence of patches much as an NLP model treats a sequence of words. Transformer-based models have shown remarkable performance in image classification, object detection, and even medical image analysis, and when pre-trained on sufficiently large datasets they can match or outperform traditional convolutional neural networks.
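The patch-splitting step can be sketched as below, assuming an image stored as nested lists indexed `[row][column][channel]` whose height and width are multiples of the patch size. In a full ViT, each flattened patch would then be linearly projected to an embedding and combined with a positional embedding; those steps are omitted here.

```python
def image_to_patches(image, patch_size):
    """Split an H x W x C image (nested lists) into flattened, non-overlapping patches.

    Returns (H // patch_size) * (W // patch_size) vectors, each of length
    patch_size * patch_size * C, ordered row by row like words in a sentence.
    """
    height, width = len(image), len(image[0])
    assert height % patch_size == 0 and width % patch_size == 0, "image must tile evenly"
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = []
            for r in range(top, top + patch_size):
                for c in range(left, left + patch_size):
                    patch.extend(image[r][c])  # append all channels of this pixel
            patches.append(patch)
    return patches
```

For example, a 224 × 224 RGB image cut into 16 × 16 patches yields a “sentence” of 196 patches, each a vector of 16 × 16 × 3 = 768 values.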

Advantages Over Previous Architectures

Transformers offer several key benefits that explain their rapid adoption across machine learning.

Parallel Processing Capability

Unlike recurrent networks, which must process a sequence step by step, transformers handle all positions in parallel during training. This parallelism enables much faster training on hardware built for parallel computation, particularly GPUs and TPUs. (Autoregressive generation at inference time remains sequential, because each new token depends on the ones before it.) Because every position is connected directly to every other, gradients also travel along short paths during backpropagation instead of through many recurrent steps.
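For a decoder trained to predict the next token, parallel training works by computing attention for every position at once while applying a causal mask, so that each position can only use earlier tokens. The sketch below shows the masking step on a matrix of precomputed attention scores; the function names are illustrative.

```python
import math

def causal_mask(n):
    # mask[i][j] is True when position i may attend to position j (that is, j <= i).
    return [[j <= i for j in range(n)] for i in range(n)]

def masked_attention_weights(scores, mask):
    """Row-wise softmax of scores, with disallowed entries set to -inf first."""
    weights = []
    for row, allowed in zip(scores, mask):
        masked = [s if ok else -math.inf for s, ok in zip(row, allowed)]
        m = max(masked)
        exps = [math.exp(s - m) for s in masked]  # math.exp(-inf) evaluates to 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights
```

All rows are computed in one pass, yet no position receives any weight from a future position, even if its raw score is very large. This is how a training sequence of n tokens yields n next-token predictions simultaneously.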

Long-Range Dependency Handling

Traditional sequence models struggled with relationships between distant elements in long sequences. Transformers excel at capturing these long-range dependencies thanks to their attention mechanisms that can directly connect any two positions in the sequence, regardless of distance. This makes them particularly effective for documents or conversations where relevant information may be widely separated.

Challenges and Limitations

Despite their strengths, transformer models come with certain drawbacks that researchers continue to address.

Computational Resource Requirements

Transformer models typically require significant memory and processing power, especially for large-scale applications. Because every position attends to every other position, the computation in self-attention grows quadratically with sequence length, and so does its memory in a straightforward implementation that stores the full attention matrix. This makes very long sequences expensive to process. Sparse attention patterns reduce the number of positions each token attends to, while memory-efficient implementations avoid storing the full attention matrix at once.
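A short calculation makes the quadratic growth concrete. The sketch below assumes a naive implementation that stores one seq_len × seq_len score matrix per head in 32-bit floats; the choice of 16 heads is an arbitrary example, and fused kernels such as FlashAttention avoid materializing these matrices.

```python
def attention_matrix_bytes(seq_len, num_heads, bytes_per_value=4, batch_size=1):
    """Bytes needed to hold the raw attention score matrices for one layer.

    Assumes a naive implementation that stores a seq_len x seq_len matrix per head.
    """
    return batch_size * num_heads * seq_len * seq_len * bytes_per_value

for n in (512, 1024, 2048, 4096):
    mib = attention_matrix_bytes(n, num_heads=16) / 2**20
    print(f"seq_len={n:5d}: {mib:8.1f} MiB per layer")
```

Each doubling of the sequence length quadruples the figure: 16 MiB per layer at 512 tokens becomes 1,024 MiB (1 GiB) at 4,096 tokens, before multiplying by the number of layers and the batch size.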

Training Data Demands

State-of-the-art transformer models often require massive amounts of training data to achieve their best performance. Pre-training on large, largely unlabeled datasets followed by fine-tuning on specific tasks helps, but the demand for data remains a challenge in domains where labeled data is scarce. Recent work on few-shot and zero-shot learning aims to address this limitation.

Evolution of Transformer Models

Since their introduction, transformers have undergone significant evolution and specialization.

BERT and Bidirectional Models

BERT introduced bidirectional pre-training for transformers. Its masked language modeling objective hides randomly chosen tokens and asks the model to predict them from the surrounding words, so the model learns to use left and right context simultaneously. This innovation led to substantial improvements on understanding tasks where context from both directions is important, such as question answering and sentiment analysis.
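The corruption step behind masked language modeling can be sketched as follows. BERT targets about 15% of tokens; of those, 80% are replaced with `[MASK]`, 10% with a random token, and 10% are left unchanged. In this sketch each position is selected independently with probability 0.15, which is a simplification, and the function name and toy vocabulary are illustrative.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """BERT-style masked-language-model corruption (simplified sketch).

    Returns the corrupted token list and a {position: original_token} dict of the
    positions the model must predict.
    """
    corrupted = list(tokens)
    targets = {}
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # this position is not a prediction target
        targets[i] = tok  # the model is trained to recover the original token here
        r = rng.random()
        if r < 0.8:
            corrupted[i] = MASK  # 80%: replace with the mask token
        elif r < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return corrupted, targets
```

Leaving some targets unchanged or randomly replaced means the model cannot assume that only `[MASK]` positions matter, which helps because the mask token never appears during fine-tuning.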

GPT and Autoregressive Models

The GPT series demonstrated the power of large-scale autoregressive transformer models for text generation. These models predict the next token in a sequence given all previous tokens; generating text means repeatedly choosing a token and feeding it back in as input, which enables coherent long-form output. Each successive, larger model in the series has brought notable improvements in generation quality and task generalization.
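The generation loop itself is simple, as the sketch below shows with greedy decoding (always taking the highest-scoring token; sampling strategies are also common). The `toy_model` function and its `BIGRAMS` table are hypothetical stand-ins: a real GPT model would score the next token using the entire prefix, not just the last token.

```python
def generate_greedy(next_token_scores, prompt, max_new_tokens, eos=None):
    """Autoregressive decoding: repeatedly append the highest-scoring next token.

    next_token_scores(tokens) must return a {token: score} dict for the next position,
    conditioned on every token so far.
    """
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = next_token_scores(tokens)
        best = max(scores, key=scores.get)  # greedy choice
        tokens.append(best)  # the new token becomes part of the next step's input
        if best == eos:
            break
    return tokens

# Hypothetical toy "model" for illustration only: it looks at the last token alone.
BIGRAMS = {"the": {"cat": 0.6, "dog": 0.4}, "cat": {"sat": 0.9, "ran": 0.1}, "sat": {"<eos>": 1.0}}

def toy_model(tokens):
    return BIGRAMS.get(tokens[-1], {"<eos>": 1.0})

print(generate_greedy(toy_model, ["the"], max_new_tokens=10, eos="<eos>"))
# ['the', 'cat', 'sat', '<eos>']
```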

Future Directions in Transformer Research

The field continues to evolve rapidly with several promising research directions.

Efficient Transformer Variants

Researchers are developing more efficient transformer architectures and compression methods to reduce computational costs. Knowledge distillation, pruning, and quantization aim to maintain performance while decreasing model size and resource requirements. Sparse attention patterns and mixture-of-experts approaches offer additional paths to efficiency.
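Quantization is the easiest of these to illustrate. The sketch below shows symmetric per-tensor int8 quantization of a list of weights, one simple scheme among many; practical toolkits often use per-channel scales and calibration data, which are omitted here, and the function names are illustrative.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero input
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    # Recover approximate float weights from the stored integers.
    return [scale * v for v in q]
```

Each weight then occupies one byte instead of the four used by a 32-bit float, and the reconstruction error of any weight is at most half of `scale`.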

Multimodal Applications

Recent work explores transformers that can process multiple data types simultaneously, such as text and images. These multimodal models show promise for applications like image captioning, visual question answering, and cross-modal retrieval, potentially leading to more versatile AI systems.

Implementing Transformer Models

Practical implementation of transformers has become increasingly accessible.

Open-Source Frameworks

Libraries like Hugging Face’s Transformers provide pre-trained models and easy-to-use interfaces for implementing transformer-based solutions. These resources have democratized access to state-of-the-art NLP capabilities, enabling rapid development of transformer applications across industries.

Transfer Learning Approaches

The transformer paradigm has proven exceptionally suitable for transfer learning. Pre-training on large general datasets followed by fine-tuning on specific tasks allows organizations to leverage powerful models without the computational cost of training from scratch. This approach has become standard practice in many NLP applications.

Comparing Transformers to Other Architectures

Understanding how transformers differ from alternative approaches helps clarify their strengths.

Versus Recurrent Neural Networks

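Unlike RNNs, which process sequences one step at a time, transformers analyze entire sequences at once. Because attention connects every pair of positions directly and each sublayer sits inside a residual connection, gradients do not have to pass through a long chain of recurrent steps. This greatly mitigates, though does not entirely eliminate, the vanishing gradient problem that plagued earlier sequence models, and it allows for more effective learning of long-range dependencies.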

Versus Convolutional Networks

While CNNs excel at capturing local patterns through their sliding window approach, transformers can learn global relationships directly through attention. Vision transformers have shown that this global perspective can outperform CNNs on certain image tasks, though hybrid approaches often combine the strengths of both architectures.

Transformer Model Optimization

Achieving peak performance requires careful tuning of transformer models.

Learning Rate Scheduling

Transformers typically benefit from learning rate schedules that warm up gradually before decaying. The warmup phase helps stabilize early training, when the model’s parameters are changing rapidly. The original transformer paper increased the learning rate linearly for the first 4,000 training steps and then decreased it in proportion to the inverse square root of the step number; some form of warmup has since become standard practice.
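The schedule from the original paper is lrate = d_model^(-0.5) · min(step^(-0.5), step · warmup_steps^(-1.5)), with d_model = 512 and warmup_steps = 4000 for the base model. A direct transcription is sketched below; clamping the step to at least 1 is an added assumption to avoid dividing by zero at step 0.

```python
def transformer_lr(step, d_model=512, warmup_steps=4000):
    """Learning-rate schedule from "Attention Is All You Need".

    Rises linearly for warmup_steps, then decays with the inverse square root of step.
    """
    step = max(step, 1)  # avoid 0 ** -0.5 at the very first step
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)
```

The two branches meet at `step == warmup_steps`, where the rate peaks at 1/√(512 · 4000), roughly 7.0e-4; halfway through warmup, and again at four times the warmup length, it is half that peak.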

Regularization Techniques

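Various regularization methods help prevent overfitting in transformer models. Dropout is applied to the outputs of the attention and feed-forward sublayers (and, in many implementations, to the attention weights themselves), and the original paper also used label smoothing. Weight decay helps control parameter growth. Layer normalization, though not a regularizer in the strict sense, plays a crucial role in stabilizing training across deep transformer stacks.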

Ethical Considerations in Transformer Use

The power of transformer models comes with important responsibilities.

Bias and Fairness

Like all machine learning models, transformers can inherit and amplify biases present in their training data. Careful dataset curation, bias mitigation techniques, and fairness evaluations are essential when deploying transformer models in sensitive applications.

Environmental Impact

The computational resources required to train large transformer models raise environmental concerns. Researchers are developing more efficient training methods and advocating for responsible use of computing resources to mitigate the carbon footprint of transformer-based AI systems.

Conclusion

Transformers in machine learning have fundamentally changed how we approach sequence processing tasks across multiple domains. Their architecture, centered on attention mechanisms, offers powerful capabilities for understanding and generating sequential data. While challenges remain in terms of efficiency and resource requirements, ongoing research continues to push the boundaries of what’s possible with transformer models. As the field evolves, transformers will likely play an increasingly central role in artificial intelligence applications, driving innovations in natural language understanding, computer vision, and beyond. Understanding these powerful models is essential for anyone working in modern machine learning.
