The term “transformer” carries different meanings across engineering disciplines. Electrical engineers associate it with power distribution equipment, while computer scientists recognize it as the deep learning architecture that underpins most modern large language models. This article explains the structure, function, and impact of transformers in artificial intelligence, drawing parallels with their electrical counterparts where the comparison aids understanding.

The Dual Meaning of Transformers

Transformers exist in two fundamentally different domains. Electrical transformers have been essential components in power systems since the late 19th century, using electromagnetic induction to transfer energy between circuits. In contrast, deep learning transformers emerged in 2017 as a novel neural network architecture that processes sequential data through attention mechanisms rather than recurrent connections.

Historical Context

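The two technologies were developed more than a century apart. Electrical transformers build on Michael Faraday’s discovery of electromagnetic induction in 1831. Practical designs followed in the 1880s from Gaulard and Gibbs, from Zipernowsky, Bláthy, and Déri, and from William Stanley, making efficient AC power transmission possible. AI transformers build on decades of neural network research, including recurrent models and earlier attention mechanisms for machine translation, and brought major gains in natural language understanding.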

Core Architecture of Deep Learning Transformers

The transformer model introduced in the 2017 paper “Attention Is All You Need” (Vaswani et al.) changed how machines process sequential data. Its architecture departs from earlier recurrent and convolutional approaches through several key innovations.

Self-Attention Mechanism

The self-attention mechanism lets the model decide, for each element of the input, how relevant every other element is. In text processing, each token attends to every other token in the sequence, so its representation is built from its full context. This removes a key limitation of traditional RNNs, which read tokens one at a time and must compress everything seen so far into a fixed-size hidden state.

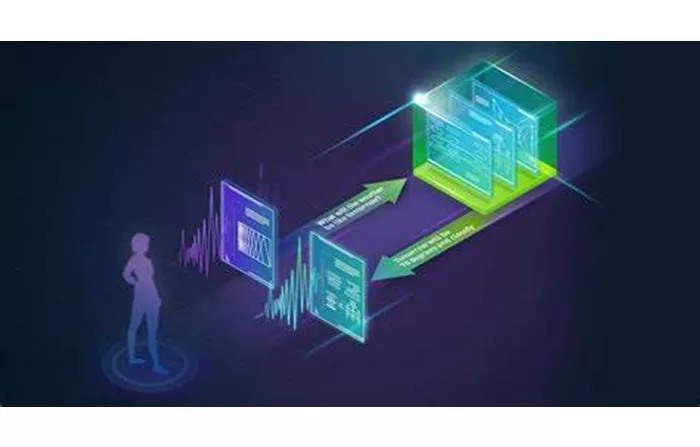
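To make the mechanism concrete, here is a minimal single-head sketch of scaled dot-product self-attention, softmax(QKᵀ / √d_k)·V, written in plain Python so it runs without dependencies. The random projection matrices, the sizes n = 4 and d = 8, and the helper names are illustrative assumptions; production code uses tensor libraries, learned weights, and multiple attention heads.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def random_matrix(rows, cols):
    """Hypothetical stand-in for learned projection weights."""
    return [[random.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x is n x d (one row per token); w_q, w_k, w_v are d x d_k projections.
    Returns the n x d_k outputs and the n x n attention weights.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(q[0])
    # Every token (query) is scored against every token (key).
    scores = [[sum(qi * ki for qi, ki in zip(q_row, k_row)) for k_row in k] for q_row in q]
    # Scale by sqrt(d_k), then normalize each row into attention weights.
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    # Each output row is a weighted average of the value vectors.
    return matmul(weights, v), weights

random.seed(0)
n, d = 4, 8
x = random_matrix(n, d)
out, weights = self_attention(x, random_matrix(d, d), random_matrix(d, d), random_matrix(d, d))
```

Row i of `weights` shows how strongly token i attends to each token in the sequence, and every row sums to 1.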

Encoder-Decoder Structure

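The original transformer uses an encoder-decoder structure: the encoder processes the input sequence and the decoder generates the output. The encoder stacks identical layers, each combining self-attention with a position-wise feed-forward network. Decoder layers add masked self-attention, which prevents each position from seeing later tokens, and cross-attention over the encoder outputs. Many later models keep only one half of this design: BERT is encoder-only, while GPT-style language models are decoder-only.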
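The decoder’s masked self-attention can be sketched by setting the scores for future positions to negative infinity before the softmax, so those positions receive zero weight. This plain-Python sketch assumes the raw scores have already been computed (here they are all equal, purely for illustration). Cross-attention would apply the same kind of weighting, but with keys and values taken from the encoder output.

```python
import math

def softmax(row):
    """Stable softmax; exp(-inf) evaluates to 0.0, so masked entries vanish."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention_weights(scores):
    """Apply a decoder-style causal mask, then softmax each row.

    Position i may attend only to positions 0..i; later (future)
    positions are set to -inf before normalization.
    """
    n = len(scores)
    masked = [[scores[i][j] if j <= i else float("-inf") for j in range(n)]
              for i in range(n)]
    return [softmax(row) for row in masked]

scores = [[0.0] * 4 for _ in range(4)]  # equal raw scores, for illustration only
for row in causal_attention_weights(scores):
    print([round(w, 2) for w in row])
# [1.0, 0.0, 0.0, 0.0]
# [0.5, 0.5, 0.0, 0.0]
# [0.33, 0.33, 0.33, 0.0]
# [0.25, 0.25, 0.25, 0.25]
```

The lower-triangular pattern is what lets a decoder be trained on whole sequences at once without any position peeking at the tokens it is supposed to predict.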

Positional Encoding

Self-attention on its own is insensitive to token order: shuffling the input tokens simply shuffles the outputs. Unlike RNNs, which process tokens in sequence, transformers therefore need explicit positional information. This is supplied by positional encodings, vectors added to the token embeddings that encode each element’s position, either computed from fixed sinusoidal functions or learned as embeddings.
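The sinusoidal variant from the original paper can be sketched as follows: even dimensions use sine and odd dimensions use cosine, at frequencies that decrease geometrically across the embedding. The sizes below are arbitrary choices for illustration, and the final addition to token embeddings is shown only as a comment.

```python
import math

def sinusoidal_positional_encoding(num_positions, d_model):
    """Sinusoidal encodings as described in "Attention Is All You Need".

    PE[pos][2i]   = sin(pos / 10000^(2i / d_model))
    PE[pos][2i+1] = cos(pos / 10000^(2i / d_model))
    Assumes d_model is even.
    """
    pe = []
    for pos in range(num_positions):
        row = []
        for i in range(0, d_model, 2):  # i plays the role of 2i in the formula
            angle = pos / (10000 ** (i / d_model))
            row.extend([math.sin(angle), math.cos(angle)])
        pe.append(row)
    return pe

pe = sinusoidal_positional_encoding(num_positions=50, d_model=16)
# Each (hypothetical) token embedding x[pos] would become x[pos] + pe[pos].
```

Because each dimension is a smooth periodic function of position, nearby positions get similar vectors, and the fixed formula extends to positions not seen during training.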

Comparing Electrical and AI Transformers

While serving vastly different purposes, both types of transformers share conceptual parallels in their transformation functions.

Energy Transformation vs Information Transformation

Electrical transformers modify voltage and current levels to enable efficient power transfer across circuits. Similarly, AI transformers modify information representations, transforming input data through successive layers of attention and neural processing to extract meaningful patterns.
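For the electrical side of the analogy, an ideal (lossless) transformer scales voltage by the turns ratio N_s/N_p and current by its inverse, so input and output power are equal. The sketch below applies that textbook relation to hypothetical values; real transformers have small losses that this model ignores. An AI transformer has no such fixed ratio: its “transformation” is a learned mapping between representations.

```python
def ideal_transformer(v_primary, i_primary, n_primary, n_secondary):
    """Ideal transformer: V_s = V_p * N_s / N_p and I_s = I_p * N_p / N_s.

    Losses, magnetizing current, and leakage are ignored, so V * I is
    the same on both sides.
    """
    v_secondary = v_primary * n_secondary / n_primary
    i_secondary = i_primary * n_primary / n_secondary
    return v_secondary, i_secondary

# Hypothetical step-down: 11 kV, 10 A primary with a 2000:100 (20:1) turns ratio.
v_s, i_s = ideal_transformer(v_primary=11_000, i_primary=10, n_primary=2000, n_secondary=100)
print(v_s, i_s)  # 550.0 200.0
```

Voltage drops by a factor of 20 while current rises by the same factor, so the 110 kW of power passes through unchanged.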

Efficiency Considerations

Both systems prioritize efficiency in their respective domains. Electrical transformers minimize energy losses through careful core design and material selection. AI transformers gain computational efficiency mainly by casting attention and feed-forward layers as large matrix multiplications that run in parallel on GPUs and TPUs. Standard attention does not reduce the number of operations, however; its cost grows quadratically with sequence length.

Practical Applications of Deep Learning Transformers

The versatility of transformer architecture has led to widespread adoption across multiple AI domains, demonstrating superior performance in numerous tasks.

Natural Language Processing

Transformers dominate modern NLP applications, including machine translation, where they outperformed earlier statistical and recurrent neural approaches. Their contextual representations allow more accurate interpretation of polysemous words and complex sentence structures.

Computer Vision Adaptation

The success in language processing inspired computer vision applications through architectures like the Vision Transformer (ViT). ViT splits an image into fixed-size patches (for example, 16×16 pixels) and processes each patch like a text token. With large-scale pretraining it achieves state-of-the-art image classification results, and related transformer designs such as DETR have been applied to object detection.
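The patching step can be sketched in plain Python. This assumes the image is stored as nested lists of shape H × W × C and that H and W are divisible by the patch size; in ViT each flattened patch would then be linearly projected and combined with a position embedding, which this sketch omits.

```python
def image_to_patches(image, patch_size):
    """Split an H x W x C image (nested lists) into non-overlapping
    patch_size x patch_size patches, each flattened into one vector.

    Assumes H and W are divisible by patch_size.
    """
    h, w = len(image), len(image[0])
    patches = []
    for top in range(0, h, patch_size):          # patches in row-major order
        for left in range(0, w, patch_size):
            flat = []
            for r in range(top, top + patch_size):
                for c in range(left, left + patch_size):
                    flat.extend(image[r][c])     # all channels of one pixel
            patches.append(flat)
    return patches

# A 224 x 224 RGB image with 16 x 16 patches gives 14 * 14 = 196 "tokens",
# each a vector of 16 * 16 * 3 = 768 values.
image = [[[0.0, 0.0, 0.0] for _ in range(224)] for _ in range(224)]
patches = image_to_patches(image, patch_size=16)
print(len(patches), len(patches[0]))  # 196 768
```

From this point on, the sequence of 196 patch vectors is handled by the same kind of encoder stack used for text.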

Multimodal Systems

Advanced systems now combine transformer architectures for processing multiple data types simultaneously. These multimodal models can jointly analyze text, images, and audio, enabling applications like automatic video captioning and visual question answering.

Technical Advantages Over Previous Architectures

Transformers offer several fundamental improvements that explain their rapid adoption and success in AI applications.

Parallel Processing Capability

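Unlike RNNs, which must process tokens one step at a time, transformers compute representations for all positions of a sequence simultaneously. This parallelism dramatically accelerates training on modern GPU and TPU hardware. At inference time, the input or prompt can likewise be processed in parallel, but autoregressive generation still produces output tokens one at a time.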
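The difference in data dependencies can be illustrated with toy scalar models. In the RNN, step t cannot start until step t−1 has finished; in attention, every score row depends only on the inputs, so the rows can be computed in any order or all at once. The weights and inputs are arbitrary, and the thread pool only demonstrates independence: Python threads give no real speedup here, and actual gains come from batched matrix multiplies on accelerators.

```python
import math
from concurrent.futures import ThreadPoolExecutor

def rnn_states(inputs, w_h=0.5, w_x=1.0):
    """Toy scalar RNN: h_t = tanh(w_h * h_{t-1} + w_x * x_t).

    Step t needs h_{t-1}, so the loop is inherently sequential.
    """
    h, states = 0.0, []
    for x in inputs:
        h = math.tanh(w_h * h + w_x * x)
        states.append(h)
    return states

def attention_score_row(i, inputs):
    """Row i of toy scalar attention scores, score[i][j] = x_i * x_j.

    It reads only the inputs, never another row's result.
    """
    return [inputs[i] * x_j for x_j in inputs]

xs = [0.5, -1.0, 2.0, 0.25]
states = rnn_states(xs)

# Independent rows can be dispatched concurrently; map() keeps the row order.
with ThreadPoolExecutor() as pool:
    scores = list(pool.map(lambda i: attention_score_row(i, xs), range(len(xs))))
```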

Long-Range Dependency Handling

The attention mechanism connects every pair of sequence elements directly, regardless of distance, so information and gradients do not have to pass through many intermediate steps. This greatly mitigates the vanishing gradient problem that limited RNNs on long sequences and enables better modeling of document-level context.

Scalability Characteristics

Transformer architectures demonstrate remarkable scaling properties. Empirically, performance improves predictably (loss falls roughly as a power law) as model size, training data, and compute are increased together, which has driven the development of foundation models with hundreds of billions of parameters.
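Parameter counts in this range follow from a common back-of-envelope estimate, used for example in the scaling-law analysis of Kaplan et al.: each layer holds about 4·d² weights in the attention projections and 8·d² in a feed-forward block of width 4·d, giving roughly 12·L·d² in total. The sketch ignores embeddings, biases, and layer norms, and assumes this standard layer shape.

```python
def approx_transformer_params(n_layers, d_model):
    """Rough non-embedding parameter count for a standard transformer stack.

    Per layer: 4 * d_model^2 for the Q, K, V and output projections, plus
    8 * d_model^2 for a feed-forward block with hidden size 4 * d_model.
    Biases, layer norms, and embedding tables are ignored.
    """
    return 12 * n_layers * d_model ** 2

# GPT-3's largest configuration as reported in its paper: 96 layers, d_model = 12288.
print(f"{approx_transformer_params(96, 12288) / 1e9:.1f}B")  # 173.9B
```

The estimate lands close to the reported 175 billion parameters, and it shows why width matters so much: doubling d_model quadruples the parameter count.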

Challenges and Limitations

Despite their advantages, transformer models present several significant challenges that researchers continue to address.

Computational Resource Requirements

Training large transformer models demands substantial computational resources, raising concerns about energy consumption and environmental impact. One widely cited estimate puts the energy used to train GPT-3 at roughly 1,300 MWh, or about 1.3 million kilowatt-hours.

Memory Constraints

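Standard self-attention compares every position with every other position, so its compute grows quadratically with sequence length, and so does its memory when the full attention matrix is stored. This limits practical application to very long documents or high-resolution images unless specialized modifications, such as sparse attention or memory-efficient attention kernels, are used.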
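A back-of-envelope calculation shows the quadratic growth. The sketch assumes the full attention matrix is materialized for one layer and one sequence, with 16 heads and 16-bit values; these are illustrative choices rather than the settings of any particular model.

```python
def attention_matrix_bytes(seq_len, num_heads=1, bytes_per_value=2):
    """Bytes needed to store the n x n attention matrices of one layer
    for one sequence; bytes_per_value=2 assumes 16-bit floats."""
    return seq_len * seq_len * num_heads * bytes_per_value

for n in (1024, 2048, 4096, 8192):
    mib = attention_matrix_bytes(n, num_heads=16) / 2**20
    print(f"n = {n:5d}: {mib:7.0f} MiB per layer")
```

Each doubling of n quadruples the result: 32 MiB at n = 1024 becomes 128 MiB at n = 2048 and 2048 MiB (2 GiB) at n = 8192, before multiplying by the number of layers and the batch size.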

Future Development Directions

Ongoing research seeks to address current limitations while expanding transformer capabilities into new domains.

Efficiency Improvements

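New architectures aim to maintain performance while reducing computational overhead. Sparse transformers restrict each position to attend to a subset of other positions, and mixture-of-experts models route each token through only a few of many expert sub-networks, so total parameters can grow without a proportional increase in per-token compute. Knowledge distillation trains a smaller “student” model to reproduce the outputs of a larger “teacher”, letting it retain much of the larger model’s capability.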
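Mixture-of-experts routing can be sketched for a single token: a gate scores every expert, only the top-k experts run, and their outputs are mixed using the gate weights renormalized over the chosen k. In this sketch the gate logits are passed in directly (a real model computes them with a learned layer), and the four “experts” are hypothetical elementwise functions standing in for feed-forward blocks.

```python
import math

def softmax(xs):
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(v - m) for v in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, gate_logits, experts, k=2):
    """Top-k mixture-of-experts routing for one token vector x.

    Only the k experts with the highest gate logits are evaluated; their
    outputs are combined with softmax weights computed over those k logits.
    Returns the combined output and the indices of the chosen experts.
    """
    top = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in top])
    output = [0.0] * len(x)
    for w, i in zip(weights, top):
        y = experts[i](x)  # only the selected experts do any work
        output = [o + w * v for o, v in zip(output, y)]
    return output, top

# Hypothetical experts standing in for feed-forward sub-networks.
experts = [
    lambda x: [2 * v for v in x],
    lambda x: [v + 1 for v in x],
    lambda x: [-v for v in x],
    lambda x: [0.0 for _ in x],
]
out, chosen = moe_forward([1.0, 2.0], gate_logits=[0.1, 2.0, 1.0, -1.0], experts=experts, k=2)
```

With k = 2, experts 1 and 2 are chosen and experts 0 and 3 are never evaluated, which is where the savings in per-token compute come from.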

Enhanced Interpretability

Researchers are developing methods to better understand attention patterns and decision processes within transformer models, crucial for applications requiring explainability like medical diagnosis or legal analysis.

Conclusion

Transformers in deep learning represent a fundamental architectural breakthrough that has transformed artificial intelligence capabilities. Their ability to process sequential data through attention mechanisms has produced remarkable advances in language understanding, computer vision, and multimodal applications. While sharing only conceptual similarities with electrical transformers, both technologies demonstrate the power of effective transformation – whether of electrical energy or information representations. As research continues to address current limitations, transformer-based models will likely remain central to AI advancement, driving innovations that reshape how machines understand and interact with our world.

The parallel evolution of these two transformer technologies – separated by over a century yet united by their transformative nature – offers a fascinating case study in engineering innovation across different domains. Understanding both contexts enriches our appreciation of each technological achievement and its broader implications.
